Date: 09.09.2025

Apple M1, 16 GB unified memory

Limitations

- Apple MPS (Metal Performance Shaders) support in PyTorch is still in beta.

Test environment

- MacBook Pro 16-inch (2021)

- macOS 15.5

- Python 3.11

macOS preparation

```shell
# git-lfs is needed later to download the model weights
brew install python@3.11 git-lfs
```
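Optional check: PyTorch's Apple Silicon (MPS) builds target a native arm64 Python, and an x86_64 interpreter running under Rosetta 2 is not the supported setup. A minimal stdlib sketch to confirm which build `python3.11` is (the helper name `is_native_apple_silicon` is hypothetical):

```python
import platform


def is_native_apple_silicon() -> bool:
    """Return True when this interpreter runs natively on macOS/arm64."""
    # platform.machine() reports "arm64" for a native interpreter and
    # "x86_64" for an Intel build or one running under Rosetta 2.
    return platform.system() == "Darwin" and platform.machine() == "arm64"


if __name__ == "__main__":
    print("Native Apple Silicon Python:", is_native_apple_silicon())
```

Run it with `python3.11`; with the Homebrew Python on Apple Silicon it should print `True`.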

Dry-run!

Stable Diffusion v1.5

- Prepare a Python environment for MPS:

```shell
mkdir -p ~/llm && cd ~/llm
python3.11 -m venv _venv_llm
source ./_venv_llm/bin/activate
python -m pip install --upgrade pip
pip install torch torchvision torchaudio
pip install transformers accelerate diffusers safetensors
# Print the PyTorch version, then whether MPS is available and built (both should be True)
python -c "import torch; print(torch.__version__); print(torch.backends.mps.is_available()); print(torch.backends.mps.is_built())"
```
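The two flags answer different questions: `is_built()` says the installed PyTorch binary was compiled with MPS support, while `is_available()` says MPS can actually be used on this machine right now. A script can fold both into a device choice with a CPU fallback. A minimal sketch, assuming a hypothetical `pick_device` helper that also tolerates PyTorch not being installed yet:

```python
import importlib.util


def pick_device() -> str:
    """Return "mps" if PyTorch is installed and MPS is usable, else "cpu".

    Hypothetical helper: it only chooses a device string and does not
    raise when PyTorch is missing from this interpreter.
    """
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed here
    import torch

    # is_built(): the binary was compiled with MPS support.
    # is_available(): the OS and hardware can use MPS right now.
    if torch.backends.mps.is_built() and torch.backends.mps.is_available():
        return "mps"
    return "cpu"


if __name__ == "__main__":
    print("Selected device:", pick_device())
```

The returned string could replace the hard-coded `"mps"` in the script's `.to("mps")` call.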

- Download Stable Diffusion v1.5 into ~/llm/sd1.5 (run from ~/llm):

```shell
git lfs install
git clone https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5 sd1.5
```

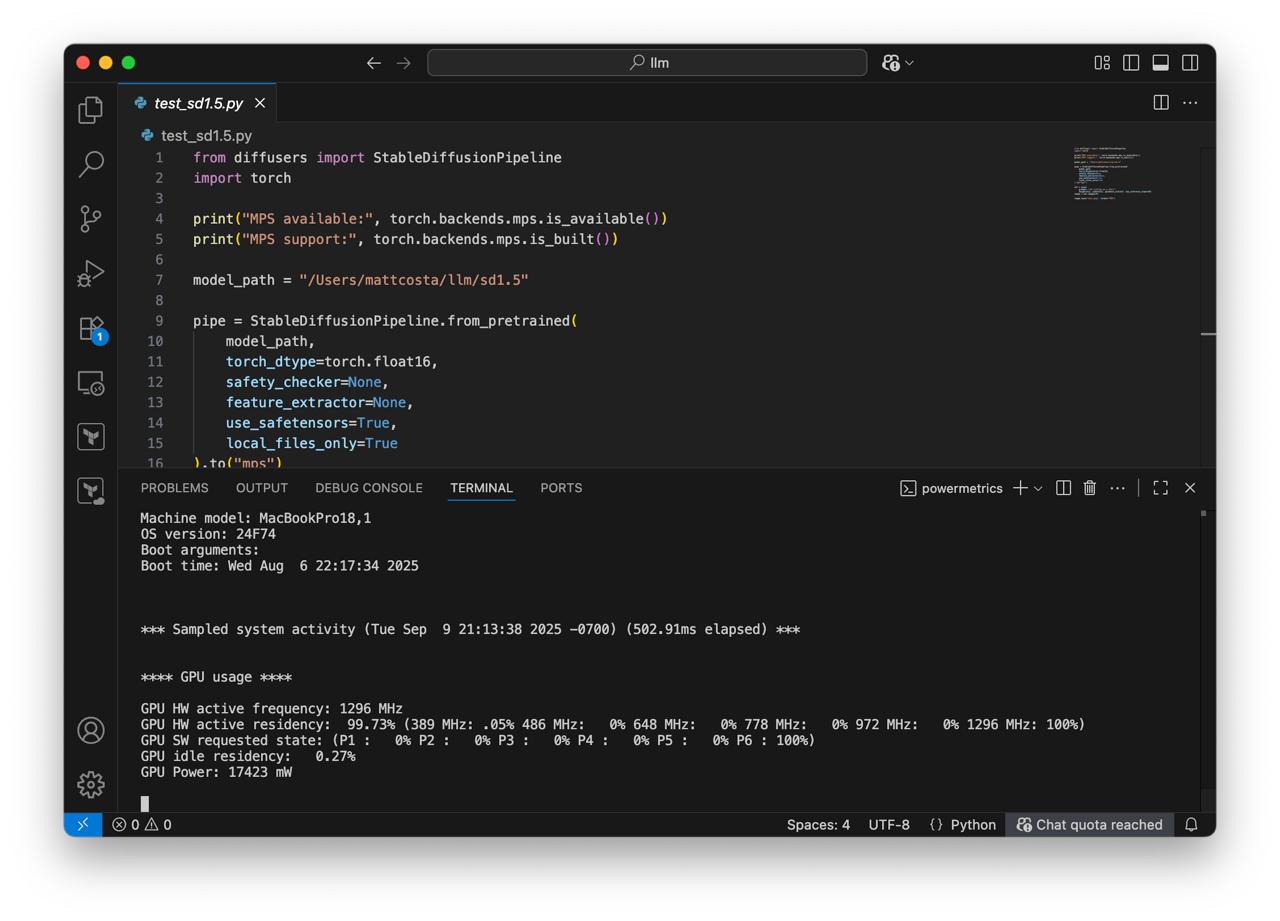
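If git-lfs was not active during the clone, the weight files are only small text pointers and loading them will fail. A stdlib sketch to sanity-check the clone before running the pipeline; the folder list is an assumption based on the standard diffusers layout, and `find_clone_problems` is a hypothetical name:

```python
from pathlib import Path

# Assumed diffusers layout for SD v1.5 (safety_checker/feature_extractor are skipped later).
EXPECTED_DIRS = ("scheduler", "text_encoder", "tokenizer", "unet", "vae")
# Every Git LFS pointer file starts with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_clone_problems(model_dir: str) -> list[str]:
    """Return problems found in a local model clone (empty list = looks OK)."""
    root = Path(model_dir).expanduser()
    if not root.is_dir():
        return [f"model folder not found: {root}"]
    problems = []
    if not (root / "model_index.json").is_file():
        problems.append("missing model_index.json")
    for name in EXPECTED_DIRS:
        if not (root / name).is_dir():
            problems.append(f"missing folder: {name}/")
    # A weight file that is still an LFS pointer is a tiny text stub, not real weights.
    for weights in root.rglob("*.safetensors"):
        with weights.open("rb") as fh:
            if fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX:
                problems.append(f"LFS pointer, not downloaded: {weights.relative_to(root)}")
    return problems


if __name__ == "__main__":
    issues = find_clone_problems("~/llm/sd1.5")
    print("\n".join(issues) if issues else "sd1.5 clone looks complete")
```

If it reports pointer files, running `git lfs pull` inside sd1.5 fetches the real weights.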

- Create the script test_mps_sd1.5.py:

```python
import os

import torch
from diffusers import StableDiffusionPipeline

print("MPS available:", torch.backends.mps.is_available())
print("MPS built:", torch.backends.mps.is_built())

# Local clone created in the previous step (~/llm/sd1.5)
model_path = os.path.expanduser("~/llm/sd1.5")

pipe = StableDiffusionPipeline.from_pretrained(
    model_path,
    torch_dtype=torch.float16,  # half precision: half the weight memory of float32
    safety_checker=None,        # skip the NSFW safety checker model
    feature_extractor=None,     # image preprocessor for the safety checker; not needed here
    use_safetensors=True,
    local_files_only=True,      # never download; use the local clone only
).to("mps")

out = pipe(
    prompt="cat sitting on a chair",
    height=512,
    width=512,
    guidance_scale=9,
    num_inference_steps=80,
)

image = out.images[0]
image.save("test.png", format="PNG")
```

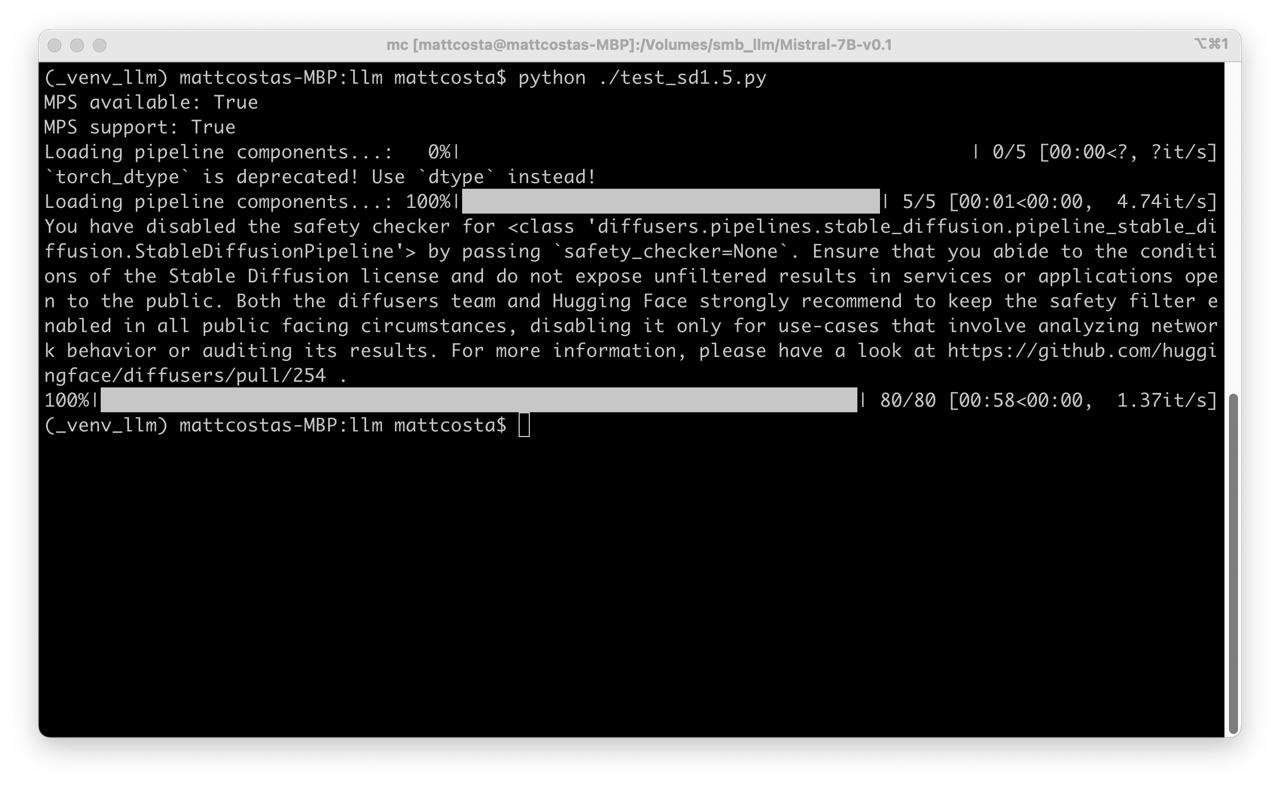
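The size and guidance settings determine how much work each run does. The SD v1.5 VAE works at 1/8 of the image resolution with 4 latent channels, so a 512x512 image is denoised as a 64x64 latent, and diffusers rejects heights or widths not divisible by 8. With `guidance_scale` above 1, classifier-free guidance evaluates a conditional and an unconditional sample per step, so 80 steps means 80 UNet calls on a batch of 2. A small arithmetic sketch (constants assumed for SD v1.5, helper names hypothetical):

```python
# Assumptions for SD v1.5 in diffusers: VAE downsamples by 8, UNet sees 4 latent channels.
VAE_SCALE_FACTOR = 8
LATENT_CHANNELS = 4


def latent_shape(height: int, width: int, num_images: int = 1) -> tuple[int, int, int, int]:
    """Return the (batch, channels, h, w) shape of the latent the UNet denoises."""
    if height % VAE_SCALE_FACTOR or width % VAE_SCALE_FACTOR:
        raise ValueError(f"height and width must be divisible by {VAE_SCALE_FACTOR}")
    return (num_images, LATENT_CHANNELS, height // VAE_SCALE_FACTOR, width // VAE_SCALE_FACTOR)


def unet_passes(num_inference_steps: int, guidance_scale: float, num_images: int = 1) -> tuple[int, int]:
    """Return (UNet calls, samples per call).

    With guidance_scale > 1, classifier-free guidance batches a conditional
    and an unconditional sample together, doubling the work per step.
    """
    per_call = 2 * num_images if guidance_scale > 1 else num_images
    return num_inference_steps, per_call


if __name__ == "__main__":
    # Settings from test_mps_sd1.5.py
    print(latent_shape(512, 512))  # (1, 4, 64, 64)
    print(unet_passes(80, 9))      # (80, 2)
```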

Run test

Check

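- Monitor GPU power with powermetrics during each test, in a separate terminal (powermetrics must run as root, hence sudo):

```shell
while true; do sudo powermetrics --samplers gpu_power -i500 -n1; sleep 1; done
```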
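To turn the monitoring loop into numbers, its output can be saved (for example by appending `| tee gpu_power.log` to the loop) and summarized afterwards. A sketch assuming the gpu_power sampler prints lines of the form `GPU Power: <n> mW`, as Apple Silicon Macs typically show; check your own output and adjust the regex if it differs. `summarize_gpu_power` and the file names are hypothetical:

```python
import re
import statistics

# Assumed line format from `powermetrics --samplers gpu_power`: "GPU Power: 1234 mW"
GPU_POWER_RE = re.compile(r"^[ \t]*GPU Power:[ \t]*(\d+)[ \t]*mW", re.MULTILINE)


def summarize_gpu_power(log_text: str) -> dict:
    """Return sample count, mean and peak GPU power (mW) found in captured output."""
    samples = [int(value) for value in GPU_POWER_RE.findall(log_text)]
    if not samples:
        return {"samples": 0, "mean_mw": 0.0, "peak_mw": 0.0}
    return {
        "samples": len(samples),
        "mean_mw": statistics.fmean(samples),
        "peak_mw": float(max(samples)),
    }


if __name__ == "__main__":
    import sys

    if len(sys.argv) == 2:
        with open(sys.argv[1], encoding="utf-8") as fh:
            print(summarize_gpu_power(fh.read()))
    else:
        print("usage: python summarize_gpu_power.py gpu_power.log")
```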

- Run the test script:

```shell
python ./test_mps_sd1.5.py
```
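For a number that is comparable across runs, the wall-clock time can be recorded next to the power readings. The shell's `time` builtin (`time python ./test_mps_sd1.5.py`) is enough; the stdlib sketch below does the same from Python with a hypothetical `time_command` helper. It measures the whole run, including loading the model from disk, not only the 80 denoising steps.

```python
import subprocess
import sys
import time


def time_command(cmd: list[str]) -> tuple[int, float]:
    """Run cmd to completion and return (exit code, wall-clock seconds)."""
    start = time.perf_counter()
    result = subprocess.run(cmd)  # child output goes straight to this terminal
    return result.returncode, time.perf_counter() - start


if __name__ == "__main__":
    # Use the current (venv) interpreter to run the test script
    code, seconds = time_command([sys.executable, "./test_mps_sd1.5.py"])
    print(f"exit code {code}, wall time {seconds:.1f} s")
```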